We reveal AppleStorm, our investigation into how Apple’s AI ecosystem quietly transmits messages (WhatsApp, iMessage) sent via Siri to Apple servers, even when this isn’t needed to complete the task.

Executive Summary

- Lumia’s research team found that messages dictated via Siri, including WhatsApp and iMessage messages, are sent to Apple servers that are not part of Private Cloud Compute. In fact, there is no assurance as to what Apple does with these messages.

- Siri transmits metadata about installed and active apps without the user’s ability to control these privacy settings.

- Audio playback metadata, such as recording names, is sent without consent. No user control or visibility exists over these background data flows.

- Apple uses two distinct privacy policies (Siri vs. Apple Intelligence), meaning similar queries may fall under different data-handling rules.

TL;DR

We reveal AppleStorm, our investigation into how Apple’s AI ecosystem quietly transmits messages (WhatsApp, iMessage) sent via Siri to Apple servers, even when this isn’t needed to complete the task. This happens without the user having any control over when data is sent or what is sent. More than just messages is sent to Siri’s servers, too. Let’s dive in.

Introduction

Lately, Apple’s AI has been making headlines. From promising robust security measures to developing localized models that process data directly on devices, Apple has positioned itself as a champion of privacy and productivity.

How safe are these innovations? Despite the numerous advancements, recent news has highlighted critical concerns about Apple’s AI suite. A lawsuit regarding Siri eavesdropping, settled last January, raises questions about user privacy. More recently, allegations surfaced that Apple Intelligence generated false notifications, including an inaccurate news summary attributed to the BBC.

When Apple launched Apple Intelligence, which relies on both on-device and cloud models (Private Cloud Compute), it introduced a variety of AI tools such as Writing Tools and Image Background, and made Siri more powerful with Apple Intelligence capabilities.

Before technologies like these get out of control, it's crucial to identify any potential security loopholes we might be overlooking. This blog explores privacy risks and unusual behaviors discovered in Siri and at the intersection of Siri and Apple Intelligence.

Apple Intelligence 101

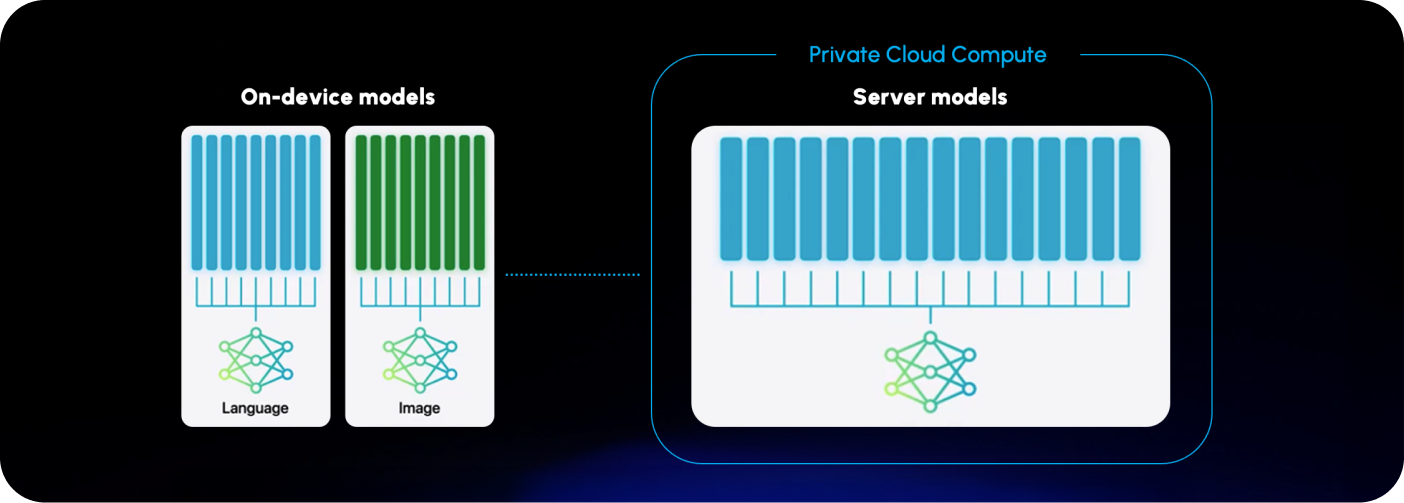

Apple Intelligence relies on a hybrid infrastructure that combines on-device processing with Apple-managed cloud servers to provide AI-driven features while maintaining strong privacy protections. The cloud component primarily operates through Private Cloud Compute (PCC).

But Siri also interacts with other Apple server infrastructure that sits outside Apple Intelligence’s PCC.

The services that make up Apple’s AI apps can mainly be broken down into four groups, which are listed below. This is based on Apple’s guide for using Apple’s products on enterprise networks.

- Private Cloud Compute (PCC)

Private Cloud Compute is Apple's secure AI processing framework that extends Apple Intelligence beyond the capabilities of on-device computing.

PCC server domains: apple-relay.cloudflare.com, apple-relay.fastly-edge.com, cp4.cloudflare.com

Apple’s Private Cloud Compute architecture.

- Siri’s Dictation Servers

Siri works closely with dictation servers, which appear to handle voice processing tasks.

Siri’s dictation domain: guzzoni.apple.com

- Siri’s Search Services

Siri integrates with Apple’s search infrastructure to deliver relevant results across devices, like Spotlight Search, Safari Smart Search, News, and Music.

Siri’s search domain: *.smoot.apple.com

- Use Case-Driven Collection

These services communicate with Apple Intelligence’s Extensions. Today, only ChatGPT is supported.

Apple Intelligence’s Extensions domain: apple-relay.apple.com
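When reading a traffic capture, it helps to label each destination host with one of the four groups above. The following is a minimal sketch of such a lookup. The domain patterns are taken from the list above, while the group labels and the `classify_host` helper are our own illustrative names, not anything Apple publishes.

```python
from fnmatch import fnmatchcase

# Domain patterns copied from the four groups listed above.
# The group labels are illustrative names chosen for this sketch.
SERVICE_GROUPS = {
    "Private Cloud Compute": [
        "apple-relay.cloudflare.com",
        "apple-relay.fastly-edge.com",
        "cp4.cloudflare.com",
    ],
    "Siri dictation": ["guzzoni.apple.com"],
    "Siri search": ["*.smoot.apple.com"],
    "Apple Intelligence extensions": ["apple-relay.apple.com"],
}


def classify_host(host: str) -> str:
    """Return the service group a hostname falls into, or "unknown"."""
    # Normalize case and drop a trailing dot from fully qualified names.
    host = host.lower().rstrip(".")
    for group, patterns in SERVICE_GROUPS.items():
        if any(fnmatchcase(host, pattern) for pattern in patterns):
            return group
    return "unknown"
```

For example, `classify_host("api-glb-aeun1a.smoot.apple.com")` falls into the Siri search group, because the wildcard pattern covers every subdomain of smoot.apple.com.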

Creating a Research Environment

Prerequisite

- Disabling Apple’s SIP mechanism

Operating System & Platform

- macOS Sequoia

- Apple Intelligence enabled

Tools Used

- mitmproxy – An interactive HTTPS proxy.

- Frida – A dynamic instrumentation toolkit.

What is the Weather Today?

To investigate, the open-source proxy tool mitmproxy was used to intercept Siri’s network traffic. Initially, simple prompts such as "Hello", "What can you do?" and "What is the time right now?" were tested, but no network activity was observed, suggesting that these queries could be handled locally without requiring external communication.

Since some queries might be handled locally, it was necessary to issue a prompt that would require external evaluation. Testing the query "What is the weather today?" resulted in the transmission of two packets.

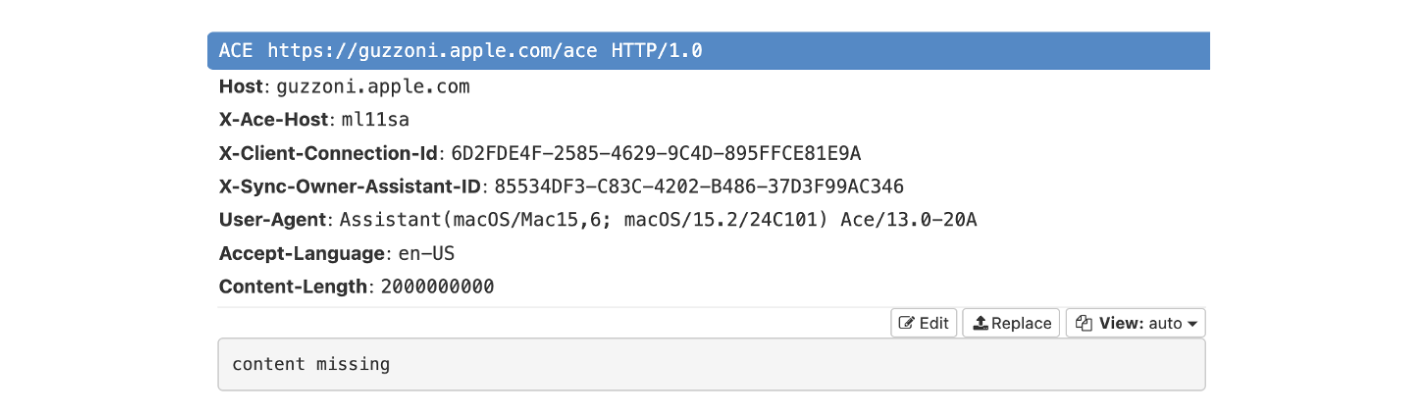

The first packet was directed to guzzoni.apple.com, identified as Apple's dictation server, but its content was not visible to us.

The second packet was sent to api-glb-aeun1a.smoot.apple.com, the search service for Siri. Let’s start with this one.

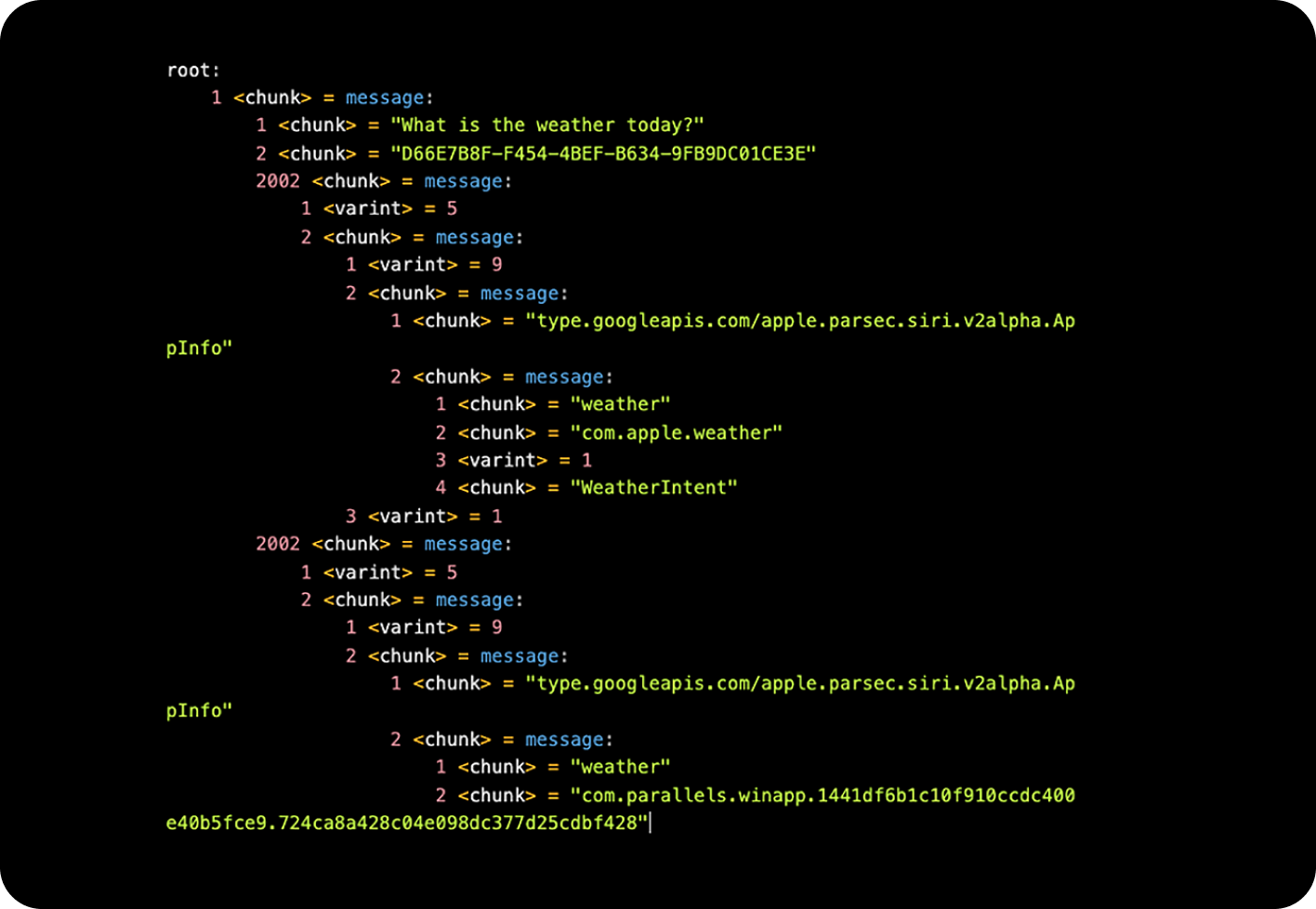

After extracting and decompressing the frame, the ProtoBuf-Inspector Python library was used to parse the ProtoBuf data. Without access to the original .proto files used to compile the data, the analysis required a best-effort approach to interpret the content.
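To illustrate what "best-effort" parsing without .proto files means, here is a minimal schema-less decoder for the Protocol Buffers wire format. It is a sketch written for this post, not the ProtoBuf-Inspector implementation, and the helper names `read_varint` and `decode_fields` are our own. It recovers only field numbers, wire types and raw values; field names and meanings still have to be guessed from the content.

```python
def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode a base-128 varint starting at pos; return (value, next_pos)."""
    result = 0
    shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:  # high bit clear marks the last byte
            return result, pos
        shift += 7


def decode_fields(buf: bytes) -> list[tuple[int, int, object]]:
    """Return (field_number, wire_type, raw_value) for each top-level field."""
    fields = []
    pos = 0
    while pos < len(buf):
        key, pos = read_varint(buf, pos)
        field_number, wire_type = key >> 3, key & 0x07
        if wire_type == 0:  # varint
            value, pos = read_varint(buf, pos)
        elif wire_type == 1:  # 64-bit (fixed64, double)
            value, pos = buf[pos:pos + 8], pos + 8
        elif wire_type == 2:  # length-delimited (string, bytes, nested message)
            length, pos = read_varint(buf, pos)
            value, pos = buf[pos:pos + length], pos + length
        elif wire_type == 5:  # 32-bit (fixed32, float)
            value, pos = buf[pos:pos + 4], pos + 4
        else:
            raise ValueError(f"unsupported wire type {wire_type}")
        fields.append((field_number, wire_type, value))
    return fields
```

A length-delimited value may itself be a nested message, so in practice one would call `decode_fields` again on such values and keep the result only when it parses cleanly.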

Let’s see what we can find.

App Lists

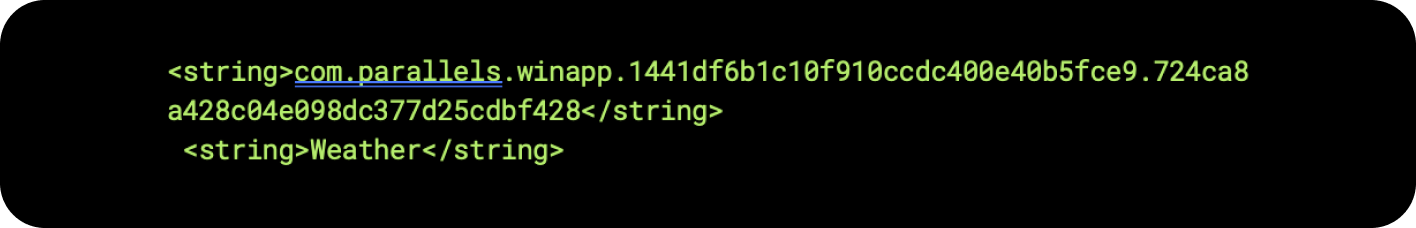

The first app listed was the official Apple Weather app, which was expected. However, the second one raised questions: it appeared to be related to Parallels, a virtual desktop Mac application that enables running the Windows operating system. A review of the Parallels settings on the device, including the applications running within the virtual machine, showed the following information:

This turned out to be the ID of the official Windows Weather app installed on the virtual machine.

Based on this observation, it appears that Siri scans the device for apps related to the prompt’s topic. To test this theory, a question about emails was entered, and the packet revealed a list of all email clients installed on the device.

So, it’s confirmed — Siri actively searches for apps on the device that match the topic of the prompt, and reports them back to Apple.

Precise Location

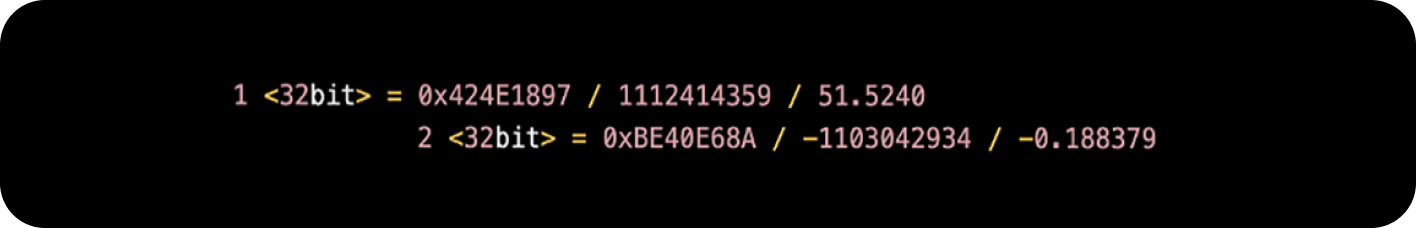

A question arises: how does Siri determine the weather without the location being mentioned in the prompt? A review of the ProtoBuf content revealed two numbers next to the apps list that appear to be coordinates.

A quick Google search confirmed this theory, showing the cafe I was working from.
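A check like this can be sketched in a few lines. The snippet below assumes the two numbers are stored as 64-bit little-endian doubles, which is one common ProtoBuf encoding; the post does not state how they were actually encoded, and both helper names are ours.

```python
import struct


def fixed64_to_double(raw: bytes) -> float:
    """Interpret 8 raw bytes as a little-endian IEEE 754 double."""
    return struct.unpack("<d", raw)[0]


def looks_like_coordinates(lat: float, lon: float) -> bool:
    """Return True if the pair falls inside valid latitude/longitude ranges."""
    return -90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0
```

A range check only says that a pair of numbers could be coordinates. Confirming it still requires looking the pair up on a map, as described above.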

Siri’s Privacy Policy specifically mentions this behavior (note that this is Siri’s privacy policy, not Apple Intelligence’s; more on that soon). It is possible to disable location sharing with Siri, but if you do consent to location sharing, your location is appended to every request, regardless of necessity.

Once again, personal information is being leaked. This raises the question of what else Apple knows about our habits.

Audio Metadata

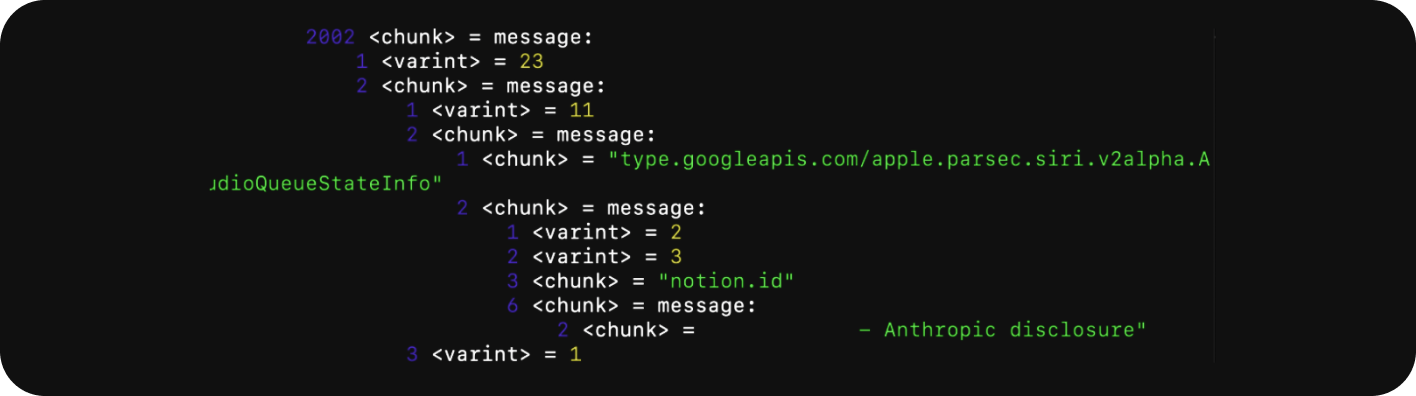

During the investigation of the weather request packet, the following content was encountered:

Initially, the appearance of a confidential document title from the Notion app in Siri’s traffic was surprising.

Once again, personal information is being leaked. And there is still other traffic that we cannot see. Maybe Apple uses some pinning mechanism…

Pinned Traffic

What is Certificate Pinning

Certificate Pinning is an additional security measure used to protect against machine-in-the-middle (MITM) attacks and fraudulent certificates. Instead of relying solely on the Certificate Authority system, pinning allows a website or application to specify which exact certificate or public key should be trusted when connecting to a particular domain. This prevents attackers from tricking users into accepting a malicious certificate issued by a compromised or unauthorized CA.
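The idea can be sketched as a fingerprint comparison. The example below pins the SHA-256 hash of a whole DER-encoded certificate, which is only one of several pinning styles (pinning the public key is another). It is a generic illustration with hypothetical helper names, not a description of how Apple implements pinning.

```python
import hashlib
import hmac


def fingerprint(cert_der: bytes) -> str:
    """Return the SHA-256 fingerprint of a DER-encoded certificate as hex."""
    return hashlib.sha256(cert_der).hexdigest()


def is_pinned(cert_der: bytes, pinned_fingerprints: set[str]) -> bool:
    """Accept the certificate only if its fingerprint is in the pinned set."""
    presented = fingerprint(cert_der)
    return any(
        hmac.compare_digest(presented, pinned.lower())
        for pinned in pinned_fingerprints
    )
```

An intercepting proxy presents its own certificate, whose fingerprint is not in the pinned set, so the client rejects the connection even if the proxy's CA is trusted by the system.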

Bypassing the Certificate Pinning

Under macOS Sequoia’s SSL pinning mechanism, each SSL context uses a dedicated verification routine. To bypass the pinning, we can modify this behavior so that the verification is skipped. To achieve this, we must alter Apple’s built-in verification logic by hooking the relevant symbols in Siri’s process, assistantd.

Using Frida, we created this script to disable the pinning.

More Apps?

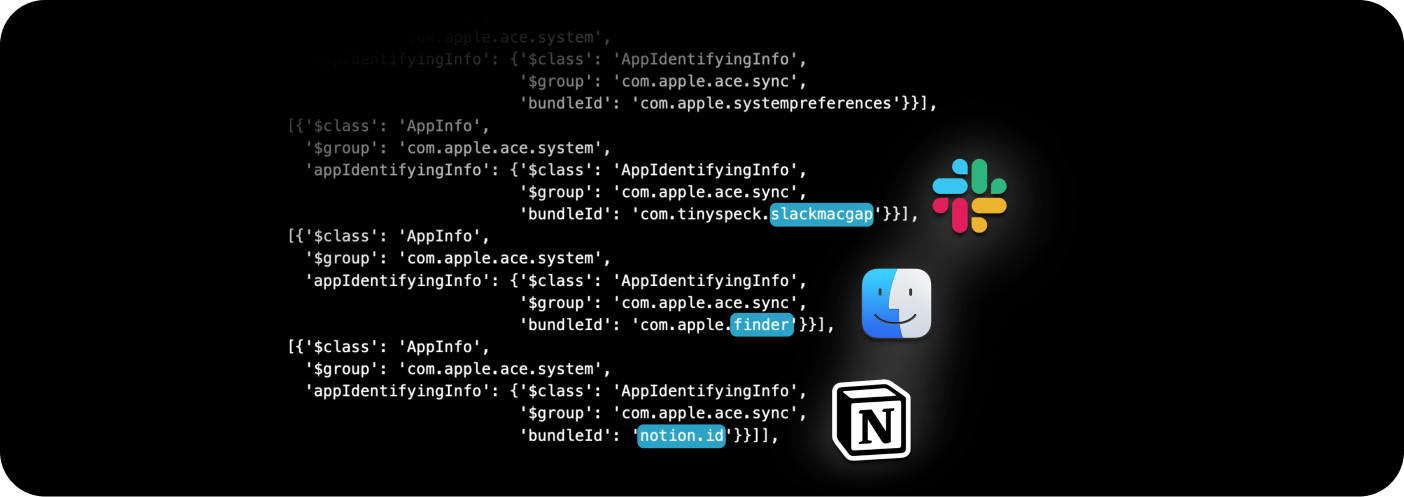

Among the recognizable strings in the ACE packet, many correspond to familiar application names, clearly indicating that these are the apps currently active on the device.

So, it’s clear: Siri actively looks for open apps on the device, and reports them back to Apple’s servers.

End-to-End Encryption? I’m Not Sure

One of the main features of Siri is the ability to interact with different apps on the device, like summarizing notes, composing emails and more.

Siri can send messages to contacts in WhatsApp. For example, you can ask Siri, by voice or by text (using Apple Intelligence), “Send the message Good morning to Bob” and it will send the message “Good morning” to a contact named Bob.

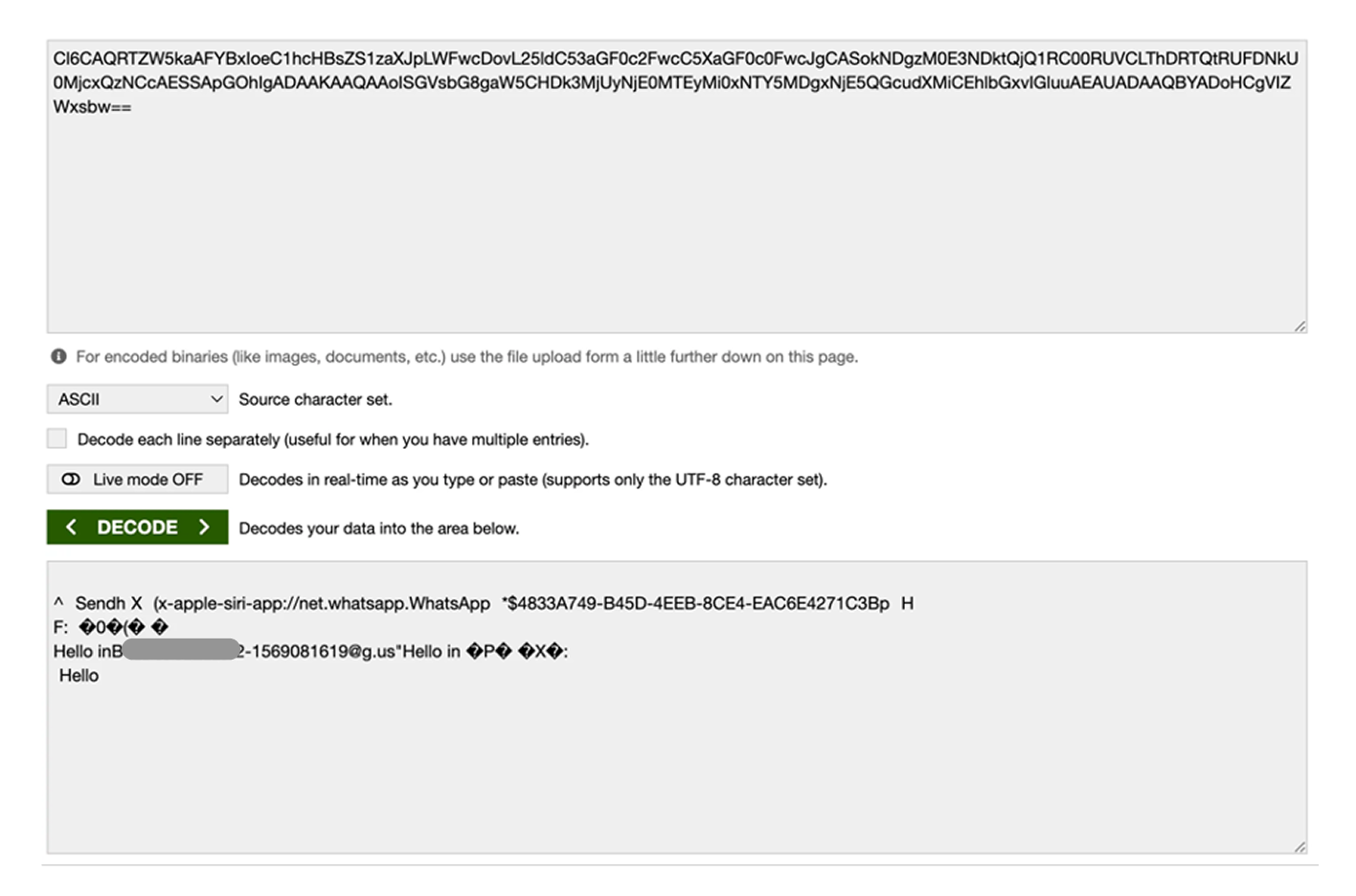

Recording Siri’s traffic while sending a message this way shows that a single, pinned packet is generated. Extracting it shows that it contains a base64 string. Decoding it revealed the following:

Siri indeed sends the content of the WhatsApp message, the recipient phone number and other identifiers back to Apple’s servers.
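The decoding step itself is simple. A minimal sketch is shown below; the helper name `decode_b64_field` is ours, and the sample input in the usage note is built from the "Good morning" example above, not taken from the captured packet.

```python
import base64
import binascii
from typing import Optional


def decode_b64_field(value: str) -> Optional[bytes]:
    """Return the decoded bytes, or None if the value is not valid base64."""
    try:
        # validate=True rejects characters outside the base64 alphabet
        # instead of silently discarding them.
        return base64.b64decode(value, validate=True)
    except (binascii.Error, ValueError):
        return None
```

For instance, `decode_b64_field("R29vZCBtb3JuaW5n")` yields the bytes of "Good morning", which shows how readily message text can be recovered from such a field once the pinned traffic is visible.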

This raises a significant concern about WhatsApp’s end-to-end encryption, as the message content apparently leaves the device through a channel other than WhatsApp itself, even though the message was composed and sent on the device.

Initially, I suspected this might be due to the Siri and Apple Intelligence settings that allow it to “learn” from certain apps; however, the behaviour persists even when not permitting them to learn from WhatsApp.

However, sending a WhatsApp message via Siri also works when communication between Siri and Apple’s servers is blocked. The heavy processing has already occurred (working out that the target application is WhatsApp, who the recipient is, and what message to send), so why is the message sent externally, and to a server that isn’t part of Private Cloud Compute? If all the data resides locally, why is there any need to send it to Apple’s servers?

So, it’s clear — Siri has access to your WhatsApp messages sent via Siri (but not to messages sent outside of Siri), and reports them back to Apple’s servers, regardless of whether permission is granted for learning from them.

What Happens When It Comes to ChatGPT

Via Apple Intelligence, users can interact with ChatGPT through Siri and Writing Tools. For example, Siri can tap into ChatGPT to provide answers when that might be helpful for certain requests.

According to Apple, every request to ChatGPT is routed through Apple Intelligence’s Extensions service. This happens also if you use your private or business account.

However, when using Siri, these requests are duplicated: they are sent both to the Extensions service and to Siri’s servers. The request to Siri’s servers serves no purpose for the user.

Disclosure Process and Apple’s Response

We informed Apple of this privacy issue in February 2025. In March 2025, after some back and forth with their security team and sending additional information, Apple acknowledged the issue and said they will be working towards a fix.

However, in July 2025, Apple reached out to say that this is actually not a privacy issue related to Apple Intelligence. Rather it’s a privacy issue related to the usage of third party services that rely on Siri. For example, the misuse of SiriKit (Siri’s extension that allows third party integrations to Siri) by WhatsApp.

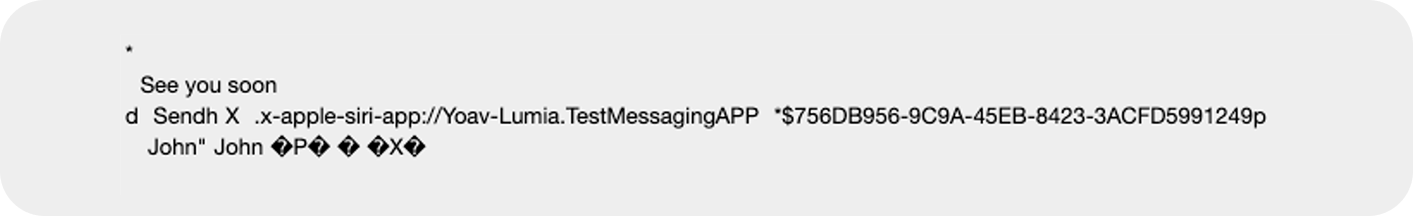

After a quick check, we saw the same behavior in iMessage as in WhatsApp when sending a message via Siri. So, before reaching out to WhatsApp about this issue, I dug deeper. I built an app, straight from Apple’s documentation, that uses SiriKit with messaging integration, and I was amazed to see the same behavior in this test as well.

Apple also explicitly mentioned that Siri’s servers are not part of Apple’s Private Cloud Compute. It seems that there are features that belong to Siri core and other features that are based on Apple Intelligence. The user, however, is unaware of which is used when.

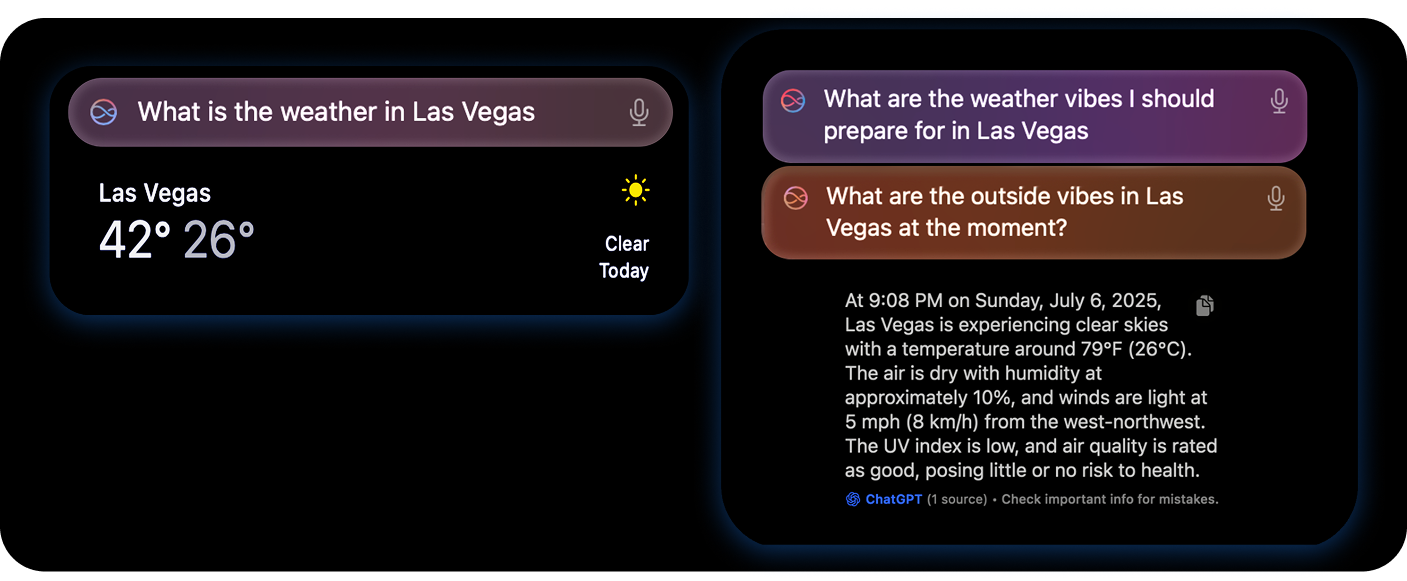

Two Privacy Policies, Two Practices, Same App

Here the privacy issues become even more complex: when you dictate a message to Siri, one of two underlying flows may handle it, a Siri flow or an Apple Intelligence flow. As a user, you have no idea when Siri will use its own flow and when it will use the Apple Intelligence flow.

For example, the query: “Hey Siri, what is the weather today?” will send the data to Siri servers. However, the query: “Hey Siri, ask ChatGPT what is the weather today?” will send the message to Apple Intelligence’s Private Cloud Compute.

Two similar questions, two different traffic flows, two different privacy policies.

Potential Mitigations

For organizations managing Apple devices, we recommend a three-pronged approach:

- Firewall and network restrictions on Siri domains

  - Set your firewall to block any network traffic to Siri’s dictation domain, guzzoni.apple.com. This should not hinder any Siri functionality.

  - For stricter environments, setting your firewall to also block Siri’s search domain, smoot.apple.com, and its subdomains is also possible, though it will impair Siri capabilities.

- Apple device and knowledge sharing policies

  - Disable any “Learn from this app” settings to be on the safe side.

- Network traffic analysis and monitoring

  - Create a policy on AI usage for employees and place necessary controls for enforcement.

  - Monitor your network traffic to gain visibility into AI usage across the organization. With this visibility, you can hone your controls to ensure compliance with the defined policy.
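The firewall recommendations above boil down to a small decision rule, sketched below. The two domains come from this post; the `should_block` function and its `strict` flag are hypothetical names for illustration, and a real deployment would express the same rule in the firewall's or proxy's own configuration language.

```python
# Siri's dictation domain: blocking it should not hinder Siri functionality.
ALWAYS_BLOCK = {"guzzoni.apple.com"}

# Siri's search domain: blocking it impairs Siri, so it is opt-in.
STRICT_BLOCK_SUFFIXES = ("smoot.apple.com",)


def should_block(host: str, strict: bool = False) -> bool:
    """Decide whether outbound traffic to host should be blocked."""
    host = host.lower().rstrip(".")
    if host in ALWAYS_BLOCK:
        return True
    if strict:
        # Match the domain itself and any subdomain, but not lookalikes
        # such as "notsmoot.apple.com".
        return any(
            host == suffix or host.endswith("." + suffix)
            for suffix in STRICT_BLOCK_SUFFIXES
        )
    return False
```

Keeping the stricter rule behind a flag mirrors the trade-off described above: the default blocks only the dictation domain, and the search domain is blocked only where reduced Siri capability is acceptable.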

Why it Matters

On a smaller, personal scale, this means that sensitive data is leaving your Apple device unnecessarily, shattering your privacy expectations and giving you limited, perhaps even zero, control over your data.

For enterprises, it raises serious compliance and security risks when sensitive corporate information leaks outside the organization’s network.

This leads to a wider discussion, as this issue goes beyond just Apple and its incorporation of AI. AI capabilities are now all around us. Many everyday apps now incorporate AI, whether it’s Grammarly, Canva or Salesforce. Knowing whether a feature is powered by AI is no longer trivial; it requires understanding the technical flow of the feature, something a typical user has no time, skill or desire to do.

How Lumia Can Help

At Lumia, our mission is to help organizations adopt AI safely, responsibly, and with proper governance, without disrupting productivity.

Apple Intelligence is just one aspect in the world of AI. However, the findings of our AppleStorm research highlight why visibility and control are essential in the age of AI-powered productivity tools. Lumia’s platform provides full visibility into AI-related data flows across thousands of applications and protocols, including hidden behaviors like those we uncovered in Siri.