AI agents are moving from experimentation to daily workflow. Tools like OpenClaw (previously known as ClawdBot and MoltBot) allow employees to deploy powerful, LLM-backed agents in minutes, without deep technical expertise. That accessibility drives rapid adoption, but it also introduces a new layer of enterprise risk.

For CISOs and security leaders, OpenClaw represents a structural shift in how automation enters the enterprise. Unlike a typical SaaS platform, it is an extensible agent framework that can operate inside, outside, and across the corporate boundary. It can execute actions, interact with internal systems, and transmit data to external AI providers. Understanding its architecture and risk surface is the first step toward responsible adoption.

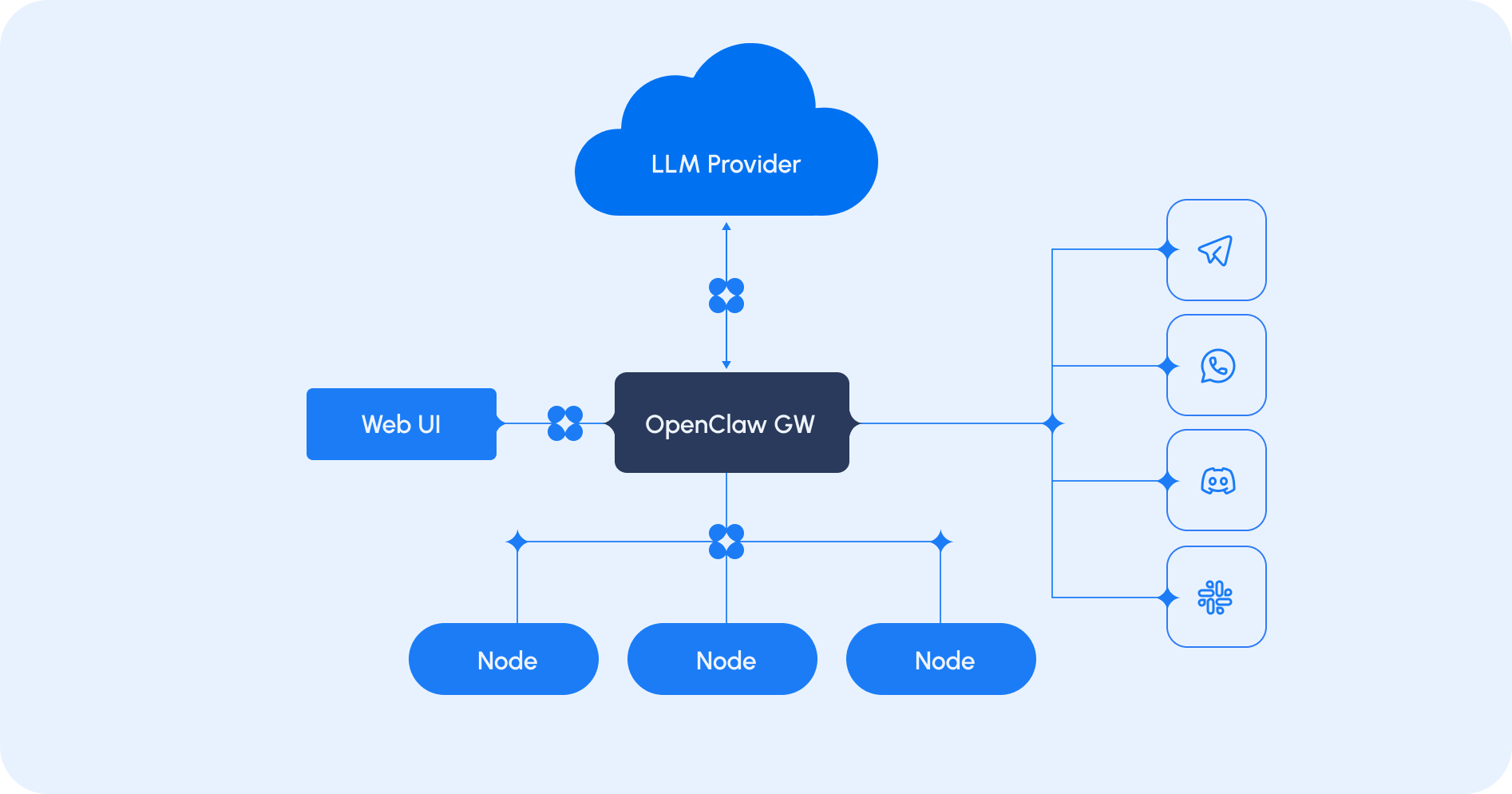

The OpenClaw Architecture

OpenClaw consists of four primary components:

1. The Gateway: The gateway is the control plane. It manages tasks, skills, configuration, and command execution. It can be thought of as a wrapper around a large language model and must be configured to use a provider such as OpenAI, Anthropic, or a local LLM. From a security perspective, this is the operational brain of the system.

2. Web UI: The web interface allows users to configure the agent, manage skills, and monitor execution. It provides accessibility and lowers the barrier to entry for less technical users.

3. Connectors: Integration points that allow the agent to interact with internal systems, APIs, and external services.

4. Nodes: Nodes can be installed on other machines or endpoints. They communicate with the main gateway and act as sub-agents. Nodes may run on laptops, servers, or even mobile devices.

This modular design creates flexibility, but it also introduces multiple decision points that must be actively governed. Every link between the gateway, connectors, nodes, and LLM provider is a potential data flow that must be understood and controlled.
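One way to make those data flows concrete is to model the components as a small directed graph and list every flow that crosses the corporate boundary. The following is a minimal sketch under stated assumptions: the component names mirror the list above, but the `Component` and `Flow` types, the `internal` flag, and the example topology (an internal gateway with one node on a personal phone) are hypothetical illustrations, not part of OpenClaw.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    internal: bool  # True if it runs on infrastructure the organization manages


@dataclass(frozen=True)
class Flow:
    src: Component
    dst: Component
    description: str


def boundary_crossings(flows: list[Flow]) -> list[Flow]:
    """Return the flows whose two endpoints sit on different sides of the corporate boundary."""
    return [f for f in flows if f.src.internal != f.dst.internal]


# Hypothetical topology, for illustration only.
gateway = Component("gateway", internal=True)
web_ui = Component("web-ui", internal=True)
laptop_node = Component("node:laptop", internal=True)
phone_node = Component("node:personal-phone", internal=False)
crm_connector = Component("connector:crm", internal=True)
llm = Component("llm-provider", internal=False)

flows = [
    Flow(web_ui, gateway, "configuration and task submission"),
    Flow(gateway, laptop_node, "commands to sub-agent"),
    Flow(gateway, phone_node, "commands to sub-agent"),
    Flow(gateway, crm_connector, "API calls to internal system"),
    Flow(gateway, llm, "prompts and context sent for inference"),
]

for f in boundary_crossings(flows):
    print(f"{f.src.name} -> {f.dst.name}: {f.description}")
```

In this toy topology, only the node on the personal device and the LLM provider link leave the organization, which is where egress controls would be focused first.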

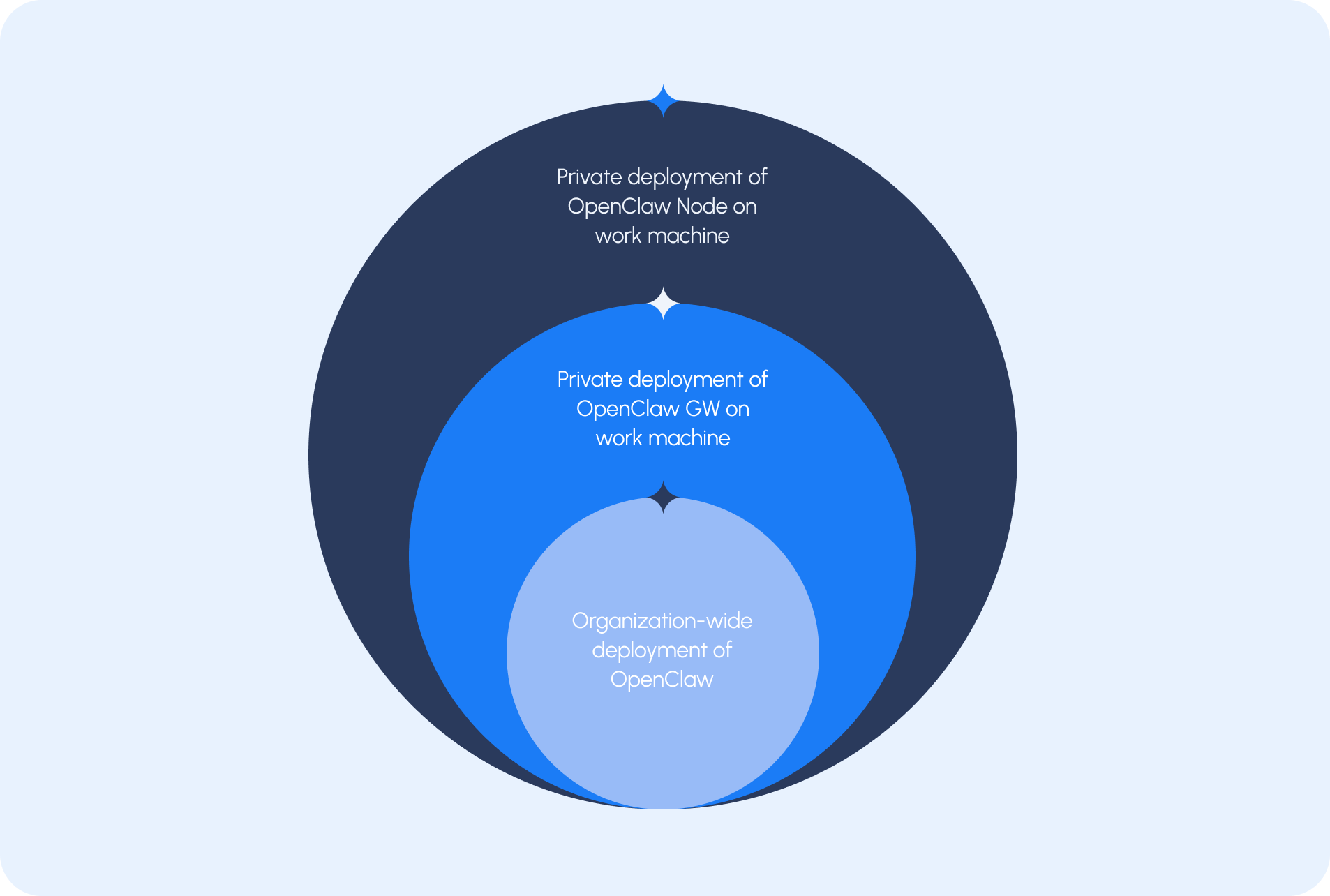

Enterprise Deployment Scenarios

Next, let’s explore three scenarios in which IT security teams might expect to encounter OpenClaw.

Scenario 1: Gateway and Node on a Work Device

In our first scenario, an employee installs OpenClaw directly on their work laptop, with both the gateway and the node running locally on the device. Commands are issued to the gateway through an external interface such as Telegram. Although the control channel is external, the execution environment is fully local. The agent can interact with files, applications, credentials, and services accessible from the workstation, and it communicates with an external LLM provider to process prompts and determine actions.

From a security perspective, monitoring the Telegram channel itself is not feasible. It is typically accessed on personal, unmanaged devices that fall outside the organization’s monitoring scope. However, the gateway and node are running on a corporate asset, and outbound communication to the LLM provider represents a data egress path that must be considered.
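Because the gateway in this scenario runs on a corporate asset, endpoint tooling can look for it. As a minimal sketch, the check below tests whether anything is listening on candidate TCP ports on the loopback interface. The port number is an assumption (a commonly reported default for the OpenClaw gateway, to be verified against the versions in your fleet), and `find_local_gateway` is a hypothetical helper name.

```python
import socket

# ASSUMPTION: placeholder port(s) for a locally running OpenClaw gateway.
# Verify against the OpenClaw versions actually present in your environment.
CANDIDATE_GATEWAY_PORTS = (18789,)


def is_listening(host: str, port: int, timeout: float = 0.5) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.settimeout(timeout)
        return s.connect_ex((host, port)) == 0


def find_local_gateway(ports=CANDIDATE_GATEWAY_PORTS) -> list[int]:
    """Return the candidate ports that have a listener on 127.0.0.1."""
    return [p for p in ports if is_listening("127.0.0.1", p)]


if __name__ == "__main__":
    hits = find_local_gateway()
    if hits:
        print(f"Possible local agent gateway on port(s): {hits}")
    else:
        print("No listener found on candidate ports")
```

An open port only shows that some process is listening; a real detection would confirm the owning process and binary before raising an alert.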

Scenario 2: Node Connected to an External Gateway

A higher-risk scenario occurs when an employee installs an OpenClaw node on a corporate device that connects to an external gateway outside the organization’s visibility and control.

In this case, instructions originate externally, but execution occurs inside the enterprise. The external gateway can direct the node to access internal systems, APIs, or sensitive data. From a security standpoint, this is unmanaged automation operating within corporate infrastructure.

Scenario 3: Formal Organizational Deployment

In a structured deployment, the gateway runs internally, connectors are approved, and nodes operate under defined controls.

This model introduces a known risk surface, but it also enables governance. Identity and access controls can be applied. Logging and monitoring can be centralized. Egress to LLM providers can be inspected and managed.

When deliberately architected, OpenClaw can align with traditional security design. The key is visibility and control across every interaction.
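The properties of a structured deployment can be expressed as checks that run before an agent environment is approved. This is a minimal sketch: the `DeploymentConfig` fields, the approved connector and endpoint lists, and the `corp.example.com` domain are all hypothetical and describe governance properties, not OpenClaw’s own configuration schema.

```python
from dataclasses import dataclass


@dataclass
class DeploymentConfig:
    # Hypothetical fields describing governance properties of a deployment.
    gateway_host: str
    connectors: list[str]
    llm_endpoint: str
    audit_logging: bool
    node_auth_required: bool
    internal_domains: tuple[str, ...] = ("corp.example.com",)


APPROVED_CONNECTORS = {"jira", "confluence"}                # hypothetical allowlist
APPROVED_LLM_ENDPOINTS = {"llm-proxy.corp.example.com"}     # inspected egress proxy


def governance_violations(cfg: DeploymentConfig) -> list[str]:
    """Return human-readable reasons the deployment falls short of the internal model."""
    problems = []
    on_internal_domain = any(
        cfg.gateway_host == d or cfg.gateway_host.endswith("." + d)
        for d in cfg.internal_domains
    )
    if not on_internal_domain:
        problems.append(f"gateway {cfg.gateway_host} is not on an internal domain")
    unapproved = sorted(set(cfg.connectors) - APPROVED_CONNECTORS)
    if unapproved:
        problems.append(f"unapproved connectors: {', '.join(unapproved)}")
    if cfg.llm_endpoint not in APPROVED_LLM_ENDPOINTS:
        problems.append(f"LLM egress bypasses the inspected proxy: {cfg.llm_endpoint}")
    if not cfg.audit_logging:
        problems.append("centralized audit logging is disabled")
    if not cfg.node_auth_required:
        problems.append("nodes can join without authentication")
    return problems


# Usage: an internally hosted gateway that still has three gaps.
cfg = DeploymentConfig(
    gateway_host="agents.corp.example.com",
    connectors=["jira", "github"],
    llm_endpoint="api.openai.com",
    audit_logging=True,
    node_auth_required=False,
)
for problem in governance_violations(cfg):
    print(problem)
```

Keeping the checks as plain data and functions makes them easy to run in CI or a change-approval workflow; the specific rules would come from your own policy.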

It’s About Governing Usage and Actions

With agentic frameworks like OpenClaw, risk no longer lies only in what is shared, but in what is done. Furthermore, these systems are non-deterministic by design. The same prompt can produce different outputs, decisions, or actions depending on context, training data, or system state. Traditional security controls were built for predictable software and human judgment, not for autonomous systems that interpret instructions and act with variability.

OpenClaw is not a static application. It is an extensible framework that evolves through new skills, connectors, and automated actions. As agents gain the ability to access systems, invoke APIs, and execute workflows, the risk surface changes dramatically.

Security programs must focus on governing agent behavior, and that begins with observing how agents operate in practice. Organizations need insight into the actions agents take, the systems they interact with, and the downstream impact of autonomous execution. Only with that understanding can enterprises determine whether those actions align with policy, permissions, and defined risk tolerance.

But defining policy for autonomous agents presents a fundamental challenge: how do you govern behavior you don’t yet understand? AI systems are general purpose. The same agent can draft documentation and polish emails, or it can wipe a machine with rm -rf, depending on who invokes it and why.

Effective governance cannot rely on static rules alone. Intelligent policy decisions require context, including the user’s role, permissions, intent, and the actual meaning of the content being shared. Because AI systems are driven by natural language, governance must be able to interpret language itself, not just match keywords or classifications. Without that semantic understanding, policies collapse into blunt controls that either overcorrect or miss material risk.
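The idea that the same agent request can warrant different outcomes depending on who invokes it can be sketched as a decision function that takes the requester’s role, the target system, and the action into account. Everything here is hypothetical: the role table, system names, and three-way `Decision` are illustrative. The `DESTRUCTIVE_MARKERS` substring check is exactly the kind of static keyword rule the paragraph warns about; it only stands in for the semantic classification a production control would need.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    REVIEW = "review"  # hold for human approval before execution
    DENY = "deny"


@dataclass(frozen=True)
class ActionRequest:
    user: str
    role: str
    system: str   # e.g. "docs", "filesystem"
    action: str   # e.g. "read", "write", "shell"
    detail: str   # the command or description the agent intends to execute


# Hypothetical role -> system -> permitted actions mapping.
PERMISSIONS = {
    "marketing": {"docs": {"read", "write"}, "email": {"draft"}},
    "engineer": {"docs": {"read", "write"}, "filesystem": {"read", "write", "shell"}},
}

# Placeholder for semantic risk classification; substring matching is deliberately naive.
DESTRUCTIVE_MARKERS = ("rm -rf", "drop table", "mkfs")


def evaluate(req: ActionRequest) -> Decision:
    """Decide on one agent action using the requester's role as context."""
    allowed = PERMISSIONS.get(req.role, {}).get(req.system, set())
    if req.action not in allowed:
        return Decision.DENY
    if req.action == "shell" and any(m in req.detail.lower() for m in DESTRUCTIVE_MARKERS):
        return Decision.REVIEW
    return Decision.ALLOW


cmd = "rm -rf /home/alice/project"
print(evaluate(ActionRequest("bob", "marketing", "filesystem", "shell", cmd)))
print(evaluate(ActionRequest("alice", "engineer", "filesystem", "shell", cmd)))
```

The identical command is denied for one role and routed to review for another, which is the contextual behavior a single static allow/block rule cannot express.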

Control Planes and Choke Points in OpenClaw

One of OpenClaw’s defining characteristics is that communication between components typically occurs over WebSockets. This creates identifiable network choke points where security teams can gain visibility and apply controls:

Node to Gateway Traffic

Monitoring encrypted sessions between endpoints and gateway instances can reveal the presence of unmanaged nodes.
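As a sketch of how this might look in practice, the function below scans pre-parsed proxy records for WebSocket upgrades to hosts that are not sanctioned gateways, grouped by source device. It assumes a forward proxy that terminates TLS, since otherwise the HTTP `Upgrade` header is not visible and you are limited to destination names and connection duration. The `ProxyRecord` shape and `APPROVED_GATEWAYS` set are hypothetical; many legitimate applications also use WebSockets, so the output is a triage list rather than a verdict.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ProxyRecord:
    # Hypothetical normalized record; map your proxy's real log fields onto it.
    src_device: str
    dest_host: str
    upgrade: str  # value of the HTTP Upgrade header, "" if absent


APPROVED_GATEWAYS = {"agents.corp.example.com"}  # hypothetical sanctioned gateway


def unsanctioned_ws_destinations(records) -> dict[str, set[str]]:
    """Map each source device to the unsanctioned hosts it opened WebSocket sessions to."""
    hits: dict[str, set[str]] = defaultdict(set)
    for r in records:
        if r.upgrade.lower() == "websocket" and r.dest_host not in APPROVED_GATEWAYS:
            hits[r.src_device].add(r.dest_host)
    return dict(hits)


records = [
    ProxyRecord("laptop-042", "agents.corp.example.com", "websocket"),
    ProxyRecord("laptop-117", "gw.example.net", "websocket"),
    ProxyRecord("laptop-117", "www.example.org", ""),
]
print(unsanctioned_ws_destinations(records))
```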

Web UI to Gateway Traffic

Monitoring this channel enables auditing of the tasks and commands users submit. Integration with SIEM platforms supports anomaly detection and incident response.
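To feed a SIEM, each observed task or command can be serialized as one structured event per line (JSON Lines), a format most SIEM pipelines can ingest. This is a minimal sketch: the field names and the `agent.command` event type are hypothetical and do not reflect any log format OpenClaw itself emits.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def audit_event(
    user: str,
    session_id: str,
    command: str,
    source: str = "web-ui",
    ts: Optional[datetime] = None,
) -> str:
    """Serialize one observed Web UI -> gateway command as a single JSON line."""
    event = {
        "timestamp": (ts or datetime.now(timezone.utc)).isoformat(),
        "event_type": "agent.command",  # hypothetical event taxonomy
        "source": source,
        "user": user,
        "session_id": session_id,
        "command": command,
    }
    # sort_keys keeps field order stable; json.dumps escapes any newlines in the command.
    return json.dumps(event, sort_keys=True)


print(audit_event("alice", "s-123", "summarize open tickets"))
```

Keeping one event per line and a stable set of keys makes it straightforward to write correlation rules, for example on unusual commands or sessions, in whichever SIEM receives the stream.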

Gateway to LLM Provider Traffic

The connection between the gateway and the LLM provider is the most significant exposure point, determining what data leaves the enterprise and how prompts are processed. Egress filtering, proxy inspection, and contractual review of LLM providers are critical components of governance.
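At an inspecting egress proxy, a gateway-to-LLM request can be checked on two axes: where it is going and what it carries. The sketch below combines a destination allowlist with a coarse scan for secret-like strings in the prompt. The allowlist contents are examples only, and the two regular expressions (the well-known AWS access key ID prefix and PEM private key headers) are a small illustrative sample; pattern matching like this catches obvious leaks but is no substitute for semantic inspection.

```python
import re
from urllib.parse import urlparse

# Example allowlist; replace with the providers your organization has contracted.
APPROVED_LLM_HOSTS = {"api.anthropic.com", "api.openai.com"}

# Illustrative secret patterns only.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


def egress_decision(url: str, prompt: str) -> tuple[bool, list[str]]:
    """Return (allowed, reasons) for a single gateway-to-LLM request."""
    reasons = []
    host = urlparse(url).hostname or ""
    if host not in APPROVED_LLM_HOSTS:
        reasons.append(f"destination {host!r} is not an approved LLM provider")
    for name, pattern in SECRET_PATTERNS.items():
        if pattern.search(prompt):
            reasons.append(f"prompt appears to contain {name}")
    return (not reasons, reasons)


print(egress_decision("https://api.openai.com/v1/chat/completions", "Summarize this ticket"))
print(egress_decision("https://llm.example.net/v1", "use key AKIAABCDEFGHIJKLMNOP"))
```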

Enabling Safe Adoption at Scale

OpenClaw is an early signal of what’s to come. AI agents are evolving from experimental tools into persistent assistants that operate across systems, make decisions, and take action on behalf of employees. This shift challenges long-standing security assumptions built around direct human control of predictable, deterministic applications.

Many enterprises respond by trying to block agentic systems outright. In practice, this is harder than it appears. Agentic capabilities are embedded across tools and workflows, and the boundary of what constitutes an agent is increasingly unclear. The same application may behave like a passive assistant in one moment and an autonomous actor in the next.

Even when blocking is possible, it offers only temporary relief. As agents become more deeply integrated into daily work, indiscriminate blocking creates friction and drives usage into unmanaged channels. Enablement is a far more sustainable path than elimination.

Safe enablement begins with visibility into agent actions and the systems they touch. Before defining acceptable behavior, enterprises must understand not only how agents are being used, but also which internal services, data stores, and external platforms they interface with.

Organizations should move beyond coarse allow-or-block decisions and establish clear behavioral boundaries for autonomous systems. These include which systems agents may interface with, what actions they are permitted to take on those systems, the scope of permissions associated with each action, and the acceptable outcomes of those actions. Because user intent, context, and agent behavior can shift from one interaction to the next, these boundaries must be enforced dynamically, in real time.

When governed this way, agents can operate inside enterprise environments while preserving accountability, control, and trust.

To see how your enterprise can govern agent actions safely and at scale, contact Lumia today.